
Certifying the Unpredictable: Ensuring Safe AI Implementation in Transportation Systems

Introduction

AI has become practically ubiquitous in the last few years, and its implications have been felt across a wide range of industries and disciplines.

With the promise of better efficiency, as well as faster and smarter analysis and decision-making, the introduction of AI in the transportation industry poses challenges we have never encountered before. Experts are considering how we can reap the benefits of AI while simultaneously ensuring the accuracy, quality, and safety of integrating AI in public transportation infrastructure systems and data.

One of the main challenges of introducing AI into transportation infrastructure is certifying technologies that are in a constant state of change. In other words, how do you certify a moving target?

Determinism is the Foundation of Safety Standards

Safety standards rely on determinism at their fundamental core; given the same set of inputs, the system behaves the same way every time. There must be a consistency to the behaviour.

For example, when energy is removed from a relay, it drops and makes on the back contacts (with the signal reverting to either restrictive or dark). This happens every time, so the relay can be certified for safety.

With the introduction of software-based relays, it became more challenging to ensure that the software behaves the same way every time, which is why standards like CENELEC EN 50128 were introduced. EN 50128 is a certification standard issued by the European Committee for Electrotechnical Standardization (CENELEC) that specifies the process and technical requirements for the development of software for programmable electronic systems used in railway control and protection applications. Together with EN 50126 and EN 50129, the EN 50128 standard defines certain objectives in terms of Reliability, Availability, Maintainability and Safety.

Then along comes AI.

AI’s nature of extrapolating beyond set data points challenges this model of determinism, making it difficult to ensure a consistent and predetermined behaviour.

How do we ensure that AI components meet the standards of Reliability, Availability, Maintainability and Safety necessary to build, operate, and maintain a public transportation system?

The Problem with AI

New Technology Presents New Opportunities

True AI, by definition, won’t fit into the deterministic model necessary for safety certification.

When plotting two points on a graph, one could create a line of best fit to determine the equation for that line.

Once you have the equation, you could interpolate between the two end points and have a good guess of anything that lies in between those two extremes. That’s interpolating.

Extrapolating is going beyond the endpoints and trying to predict what happens outside of your range of data. Any mathematician will tell you not to do that, because the curve might behave in completely unpredictable ways.

Interpolating is fairly safe; extrapolating, not so much.
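The difference is easy to see numerically. The sketch below is a toy illustration, not a model of any real system: it assumes a hypothetical "true" curve y = x², draws a straight line through two sampled points, and compares the line's error inside and outside the sampled range. The function names and sample points are invented for this example.

```python
def line_through(p0, p1):
    """Return the straight line passing through two (x, y) points."""
    (x0, y0), (x1, y1) = p0, p1
    slope = (y1 - y0) / (x1 - x0)
    intercept = y0 - slope * x0
    return lambda x: slope * x + intercept


def true_curve(x):
    """Hypothetical real-world behaviour (assumed for illustration only)."""
    return x ** 2


# Fit the line using only two observations, at x = 1 and x = 2.
model = line_through((1.0, true_curve(1.0)), (2.0, true_curve(2.0)))


def abs_error(x):
    """Distance between the fitted line and the true curve at x."""
    return abs(model(x) - true_curve(x))


interp_error = abs_error(1.5)   # inside the sampled range
extrap_error = abs_error(10.0)  # far outside the sampled range

print(f"error when interpolating at x=1.5:  {interp_error}")
print(f"error when extrapolating at x=10.0: {extrap_error}")
```

With these assumed values, the line is off by 0.25 between the two sampled points and by 72 at x = 10, even though the same equation is used in both cases.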

AI extrapolates beyond set data points. It just “learns” or, more accurately, takes what you’ve trained it with, starts trying different things, evaluates the success or failure of those attempts, and carries on trying new things, all without telling you anything about what it’s doing. In doing so, AI tries to expand beyond those outer points, testing the behaviour and, by extrapolating, determining what configuration meets the intended outcome.

The moment we push “start” on a true AI system, it’s going to start learning and modifying its own behaviour to expand the extrapolation and evolve itself and its data. That’s akin to software code that rewrites itself.

That is very different from conventional software, which is deterministic because the code is set or “frozen.” It doesn’t change. We have very specific versions of software in this frozen state, which allows us to certify the software, and the data it uses, for safety.
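As a rough sketch of this contrast, the example below compares a frozen rule with a rule that adjusts itself after every input. Both classes, the overspeed-alarm scenario, and all numeric values are hypothetical and chosen only for illustration; real AI systems learn in far more complex ways.

```python
class FrozenRule:
    """Frozen logic: the limit never changes after release."""

    LIMIT = 80.0

    def decide(self, speed):
        return speed > self.LIMIT


class AdaptiveRule:
    """Hypothetical self-adjusting logic: the limit drifts toward each
    observed speed, so earlier inputs change later decisions."""

    def __init__(self, limit=80.0, rate=0.5):
        self.limit = limit
        self.rate = rate

    def decide(self, speed):
        decision = speed > self.limit
        # "Learning" step: move the limit toward the value just observed.
        self.limit += self.rate * (speed - self.limit)
        return decision


inputs = [85.0, 100.0, 85.0]  # the first and last inputs are identical

frozen = FrozenRule()
adaptive = AdaptiveRule()

frozen_outputs = [frozen.decide(s) for s in inputs]
adaptive_outputs = [adaptive.decide(s) for s in inputs]

print("frozen:  ", frozen_outputs)
print("adaptive:", adaptive_outputs)
```

The frozen rule gives the same answer for 85.0 both times. The adaptive rule raises the alarm for 85.0 the first time but not the second, because its internal limit moved in between. That is the kind of behaviour a certification based on determinism cannot pin down.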

How do you certify something when it’s in constant motion?

The short answer is, we don’t actually know yet. There are a lot of good theories, but no concrete methodology for certifying AI yet. It is entirely likely that the future of safety certification will involve a paradigm shift away from the comfort of determinism.

AI isn’t coming at some distant time in the future; it’s already here.

Organizations would be much better off taking a proactive approach in ensuring AI integration or implementation is safe and secure.

That is a great challenge, and a difficult one to navigate, because new technologies introduce new issues that affect other elements in the system, causing a domino effect. Solving the challenges of safely integrating AI into any infrastructure system requires a great investment of time and money. There are also macro-environmental challenges.

New Technologies are in a competitive race to be “first to market”

Organizations are eager to be the first to market when introducing new technologies. With that eagerness comes a tendency to minimize the importance of safety, which can lead to catastrophic results.

We recently witnessed an example of this behaviour in Stockton Rush, the CEO of OceanGate Expeditions, which operated the Titan submersible bound for the Titanic. Rush tragically died in the implosion along with the other passengers. In his race to implement new submersible technology, he dismissed the usefulness of safety regulations, with deadly consequences.

Emergent Properties of New Technology

Emergent properties are “unexpected behaviours that stem from interaction between the components of an application and their environment” (Johnson, 2006).

When you start putting elements together, emergent behaviour arises, and the more complicated the elements, the system, and the interactions between the elements, the more emergent behaviours develop. Sometimes emergent properties are desirable, and other times they are undesirable, but in both cases, they need to be accounted for.

Software-based Signalling and Relay-based Signalling

Software-based signalling was introduced to increase efficiency by minimizing the number of relays used in a relay-based signalling system and increasing the flexibility of the system by minimizing the physical wiring needed for a relay-only based system (by moving the wiring into logic-based software). One drawback is that software-based signalling needs more processing power.

When software-based signalling was introduced, it gave rise to an emergent behaviour: race conditions. A race condition is “the condition of an electronics, software, or other system where the system’s substantive behaviour is dependent on the sequence or timing of other uncontrollable events” (Wikipedia).

Software-based signalling ran alongside relay-based signalling, but the two behaved differently because software is sequential: it processes the first input, then the second input, and so on. If the same inputs arrive in a different order, the output can change, which makes the behaviour inconsistent. That’s a race condition. It’s a race to see which input comes in first, and the behaviour differs depending on which input “won the race.”
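A minimal sketch of such order dependence follows. The event names and the logic are hypothetical and deliberately simplistic; this is not how a real interlocking is written. The point is only that the same two inputs, processed sequentially in a different order, produce a different output.

```python
from itertools import permutations


def process(events):
    """Process events one at a time, in arrival order (toy logic)."""
    occupied = False
    signal = "stop"
    for event in events:
        if event == "track_occupied":
            occupied = True
        elif event == "route_request" and not occupied:
            # The request is granted only if no occupancy has been seen yet.
            signal = "proceed"
    return signal


inputs = ("track_occupied", "route_request")

# Run every possible arrival order of the same two inputs.
results = {order: process(order) for order in permutations(inputs)}

for order, signal in results.items():
    print(" then ".join(order), "->", signal)
```

Here the signal ends at "stop" when the occupancy report arrives first and at "proceed" when the route request arrives first, even though the set of inputs is identical.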

Conversely, a relay-based system can process multiple inputs simultaneously with no issue. Along with the software-based signalling system, relay-based signalling systems were still needed and operated in parallel as the last line of defence on the output.

The software safety standard dictates the process operators must follow to minimize the number of harmful emergent properties caused by the software-based signalling system. These standards establish a rigour for the design and implementation of the software coding to minimize the effects of systematic errors (i.e. non-random errors such as design errors, coding errors, compiler faults, and requirements omissions, which result in over-function and under-function of the software). Through understanding and controlling the over-function and under-function of the intended software, the emergent properties became manageable.

Ariane 5 Rocket Failure

Emergent properties can have disastrous consequences.

Ariane flight V88 was the failed launch of the Arianespace Ariane 5 rocket, which exploded on June 4, 1996.

The launch ended in failure due to multiple errors in the software design, which was carried forward from Ariane 4.

Ariane 5’s design aimed to reach orbits at higher latitudes by following a different launch trajectory than the Ariane 4. As a result, the Ariane 5 reached considerably higher horizontal velocity early in flight, which wasn’t considered when the same code was reused. This triggered an overflow error, which halted the inertial reference platform. The data dump resulting from the halt of the inertial platform was misinterpreted as flight data by the flight control system. In short, when the Ariane 5 took off and started tilting, it went beyond the range the inertial platform expected, and it exploded. This was the emergent property: a small error in reusing old code led to a disastrous outcome.
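The overflow in question was a conversion of a 64-bit floating-point value into a 16-bit signed integer. The sketch below imitates that kind of conversion in Python. The function name and the two sample values are hypothetical and are not actual flight data; they only show how a value that fits the old operating range converts cleanly while a larger one does not.

```python
INT16_MIN, INT16_MAX = -32768, 32767


def to_int16_checked(value):
    """Convert a float to a 16-bit signed integer, failing if it does not fit."""
    as_int = int(value)
    if not INT16_MIN <= as_int <= INT16_MAX:
        raise OverflowError(f"{value} does not fit in a 16-bit signed integer")
    return as_int


# Hypothetical readings, for illustration only.
old_vehicle_reading = 20000.0  # within the range the reused code was designed for
new_vehicle_reading = 40000.0  # larger value produced by a different trajectory

print(to_int16_checked(old_vehicle_reading))

try:
    to_int16_checked(new_vehicle_reading)
except OverflowError as err:
    # If nothing handles this error, the component stops working.
    print("conversion failed:", err)
```

The code itself is unchanged between the two calls. Only the operating envelope changed, which is why reusing certified code in a new context still calls for a fresh look at its assumptions.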

As you introduce more functions, more interfaces, and more complexity, more things can go wrong. Just because it worked last time doesn’t mean it’s going to work this time.

Not every new technology is risky, and so the trick here is how to identify and assess new technologies.

How to Appropriately Manage Risk

TURTLES ALL THE WAY DOWN – V MODELS WITHIN V MODELS

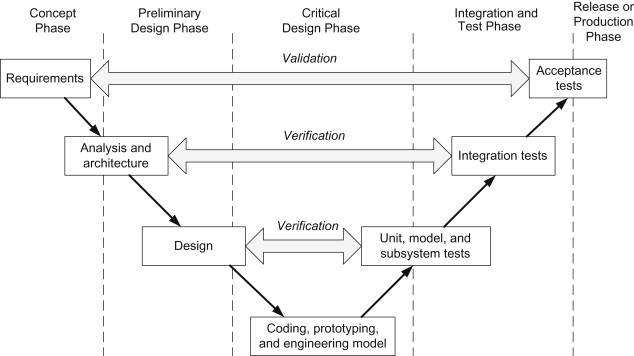

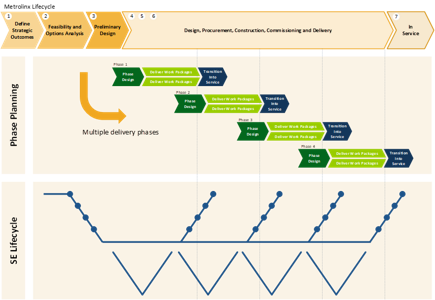

The standard V model in systems engineering flows down through design and up through integration, with many components dependent on other components in the model to function.

In a railroad infrastructure project, every component necessary to make a railway function has its own V process. This means that to deliver a massive infrastructure project successfully, there have to be V lifecycles within V lifecycles across the life of the project.

One such set of processes comes from the CENELEC EN 50126/50128/50129/50159 standards, each of which must be followed and certified before moving on to the next stage of the project.

Here is a sample railway project V model project execution plan. It takes a lot of work to make these boxes in the diagram turn green.

Risk Based Assurance

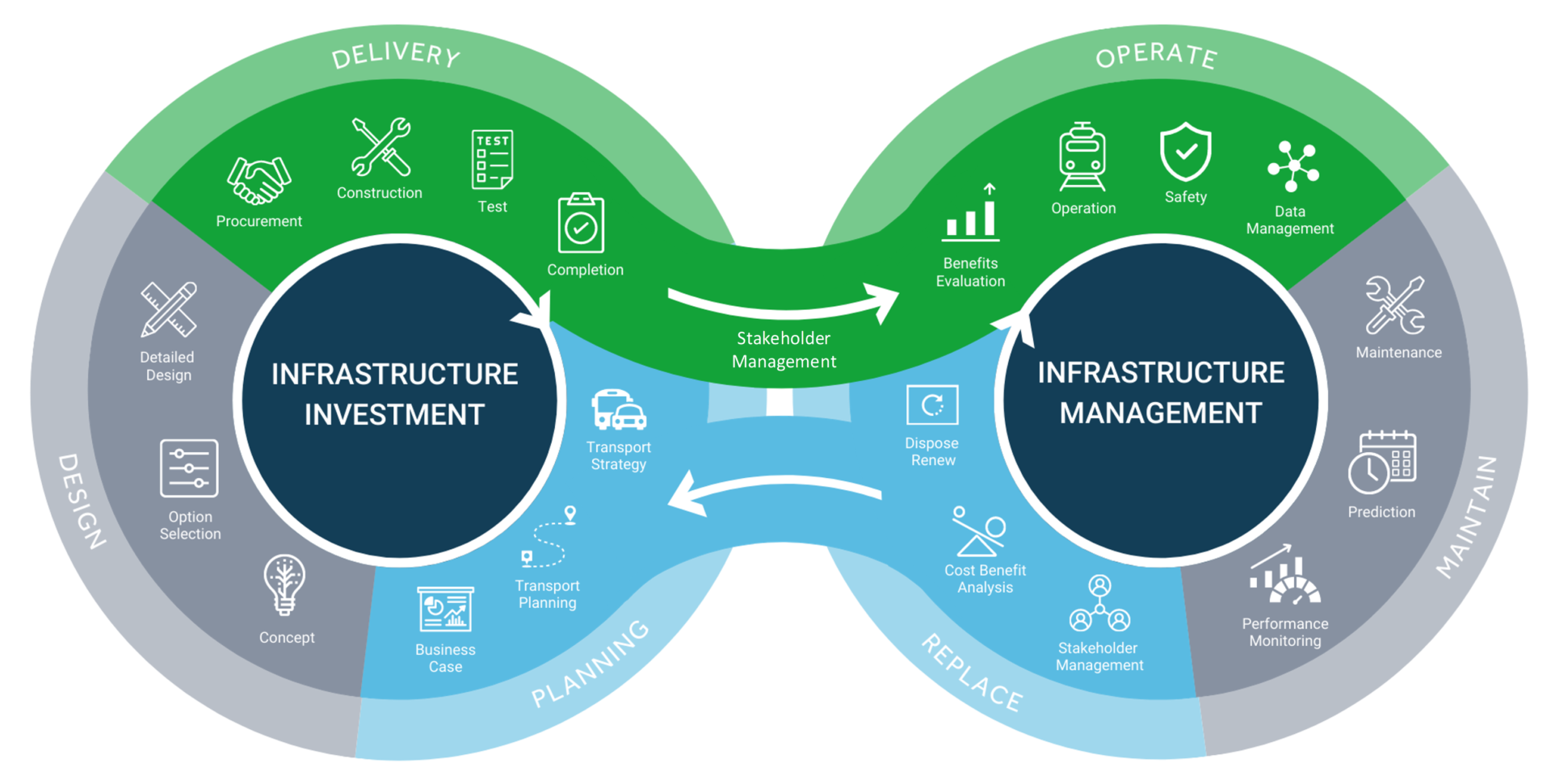

When trying to determine the level of risk assurance needed for any component or system, we need to consider that different levels of risk and complexity require different levels of oversight.

Here, we consider the business case for the new element, the concept development and eventually, its deployment.

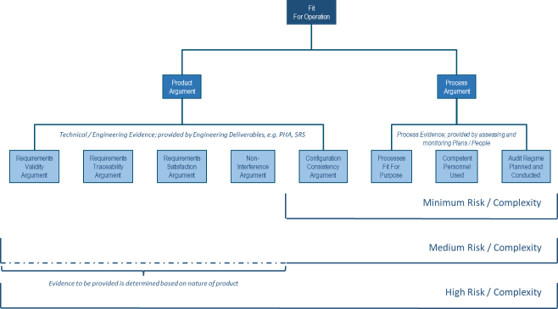

To determine whether a new component or element is fit for operation, we need to consider the product and the process assessments. The product assessment considers criteria including requirement validity, traceability, satisfaction, non-interference, and configuration consistency. The process assessment considers if the process is fit for purpose, if the personnel are qualified or knowledgeable, and how the audit regime is planned and conducted.

The more interconnected an element is with the parts of the system that perform safety-related functions, the higher the risk and, by extension, the greater the scrutiny the risk assessment requires.

Right Sizing Certification

Right sizing certification for projects is critical. Project owners need to determine the level and priority of risk assessment needed for each element of the system. This is because project owners have a limited amount of “gold and gunpowder” (time, money, people, process) to use on any problem or set of problems. Therefore, they cannot afford to waste resources on elements that will not add a lot of value.

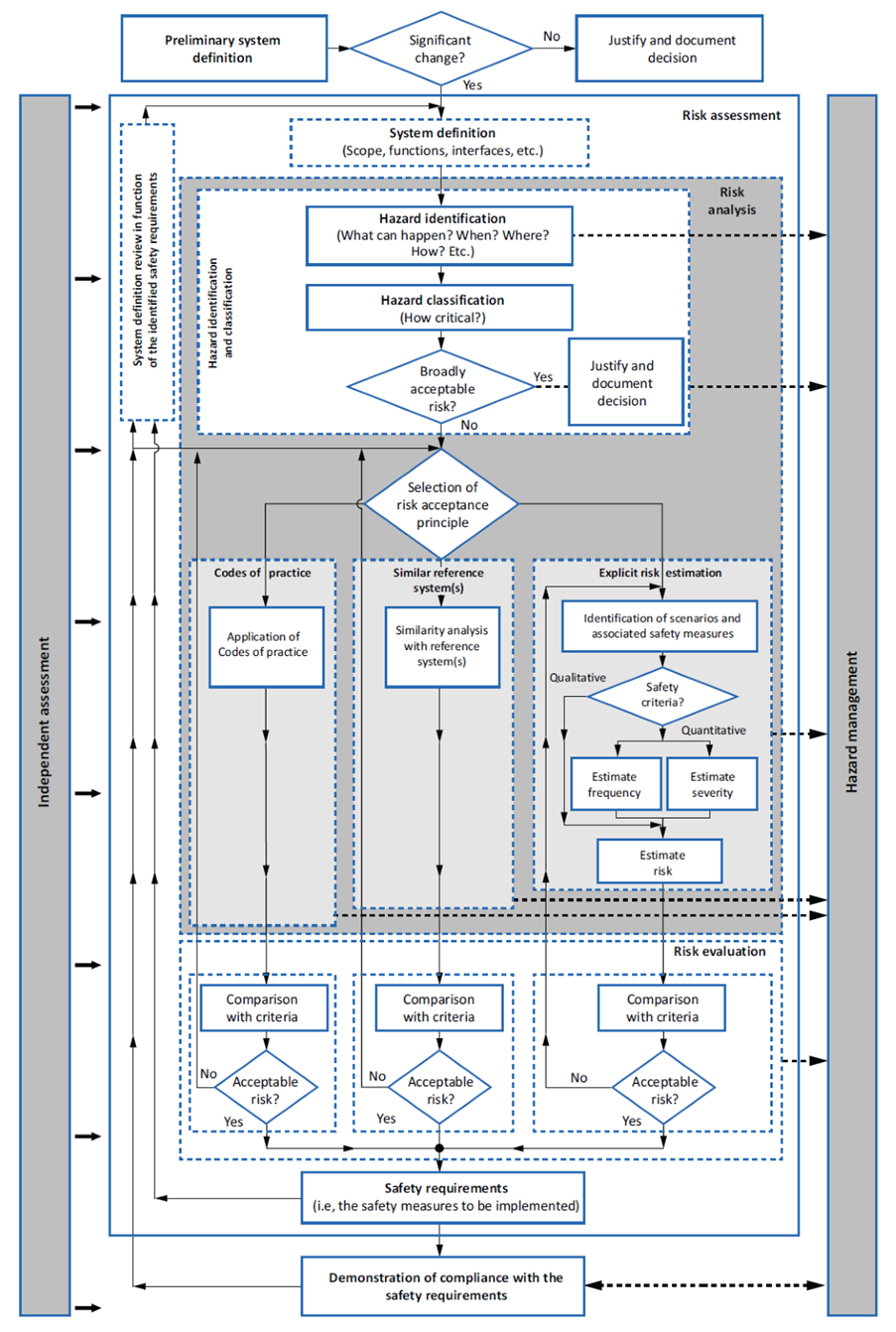

How do project owners ensure that the risk is reduced to a satisfactorily low level? A common concept followed by EU members is to focus on the significance of change. The more significant the change (i.e. the newer the element, the more complex the system, the higher the potential for harm, the greater the interrelationship with other changes, etc.), the more explicit and detailed the risk assessment needs to be.

When introducing new components, project owners need to determine if existing standards and codes of practice from governmental bodies would apply to that component or process.

If the new component, element, or technology is similar to existing ones, project owners must demonstrate the similarities and differences and create more detailed safety requirements around the differences.

For any component, element or technology that differs from what the existing standards cover, project owners need to determine the best areas to use their limited supply of “gold and gunpowder,” prioritizing the system’s safety functions.

For new elements, components, or technologies that do not fall under existing standards and codes of practice, project owners conduct an explicit risk estimation, which includes quantitative risk evaluation and frequency and severity assessments. Once they determine how frequently a hazard is likely to occur and how severe its consequences would be, they can create the necessary safety requirements around it.
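One common way to combine frequency and severity is a simple risk matrix. The sketch below is illustrative only: the category names, numeric scales, thresholds, and hazard labels are assumptions made for this example and are not taken from any standard or project.

```python
# Assumed ordinal scales (illustrative, not from a standard).
FREQUENCY = {"improbable": 1, "remote": 2, "occasional": 3, "probable": 4, "frequent": 5}
SEVERITY = {"insignificant": 1, "marginal": 2, "critical": 3, "catastrophic": 4}


def risk_score(frequency, severity):
    """Combine frequency and severity into a single ordinal score."""
    return FREQUENCY[frequency] * SEVERITY[severity]


def risk_class(score):
    """Map a score to an assumed acceptability class (thresholds are made up)."""
    if score >= 12:
        return "intolerable"
    if score >= 6:
        return "undesirable"
    if score >= 3:
        return "tolerable"
    return "negligible"


# Hypothetical hazards for a new component.
hazards = [
    ("hazard A", "frequent", "catastrophic"),
    ("hazard B", "occasional", "marginal"),
    ("hazard C", "improbable", "insignificant"),
]

for name, frequency, severity in hazards:
    score = risk_score(frequency, severity)
    print(f"{name}: score {score}, class {risk_class(score)}")
```

The resulting classes indicate where safety requirements, and the limited time and budget available, should be concentrated first.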

For example, when using software-based signalling, all the safety functions get compartmentalized into as few components as possible because each component must be certified separately.

This is why railway signalling systems always reuse the same hardware, core software, and computing platform: these have already been safety certified. However, the data that drives the system must be validated every single time.

The goal is to compartmentalize the system components as much as possible, so that we reduce the number of elements that we need to certify for safety and save time, cost and resources.

A Tailored Path Forward Approach

When conducting a Risk Estimation, we have to go back to the first principle of certification: The Protection of Public, Passengers, Staff, and Property.

When assessing the impact of AI, project owners need to keep the first principle of certification in mind.

The risk estimation needs to determine the appropriate level of oversight and assurance corresponding to the level of risk when introducing new technologies.

Swiss Cheese Model of Risk Mitigation

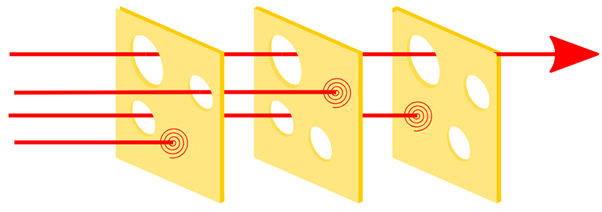

In safety engineering, we always strive to have multiple layers of defense to ensure safety.

Imagine multiple layers of Swiss cheese aligned. Those represent the multiple layers of safety defense. No one layer is perfect as the world changes.

As these layers of Swiss cheese move around, the goal of safety engineering is to ensure that we have enough layers of defense to prevent an accident. In the image, you can see three hazard vectors that were stopped by the layers of defense, but one passes through where the holes lined up, giving rise to the potential for an accident.
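The value of adding layers can be sketched with simple arithmetic. The example below assumes that each layer fails independently of the others, which real systems often violate (common-cause failures), and the per-layer probabilities are invented for illustration.

```python
import math


def breach_probability(hole_probabilities):
    """Probability that a hazard passes every layer, assuming the layers
    fail independently of one another (a strong simplifying assumption)."""
    return math.prod(hole_probabilities)


# Hypothetical chance that a hazard slips through each individual layer.
three_layers = [0.1, 0.05, 0.2]
four_layers = three_layers + [0.1]

p_three = breach_probability(three_layers)
p_four = breach_probability(four_layers)

print(f"three layers: {p_three:.6f}")
print(f"four layers:  {p_four:.6f}")
```

Under these assumptions, every additional imperfect layer multiplies the chance of the holes lining up by a number smaller than one, which is why several modest defenses can outperform a single strong one.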

Baby Steps in AI Implementation

Given all we discussed on safety risks and assurance, how can we implement AI in transportation infrastructure design operations while ensuring safety and risk assurance of the operations?

To start, AI shouldn’t be implemented within safety critical systems in the early stages. It’s recommended that project owners start with AI implementation in non-safety critical components, those with medium to minimal risk, to gain insight into AI and adjust the system accordingly, before going into safety critical applications.

We had computers for years before we decided to try to use them on railroads. That’s the lesson to draw from our history: resist the urge to be the first one out with new technology before ensuring it is safe and secure.

We are already seeing non-critical AI implementations being integrated into transportation infrastructure, such as sensor vision systems, which were recently piloted by New York City Transit.

Other examples include remote diagnostics, predictive maintenance, automated track inspection, and demand-based route planning and scheduling that are being introduced in the rail industry.

The Good News

There are some positive conditions in railway transportation that are conducive to piloting new technologies. Since railway transportation has a dedicated right of way, it offers a controlled environment that driverless cars on the highway, for example, don’t have.

Railways also have access to high energy sources, which means the energy impact (i.e. computational power load) of implementing new technologies would be more easily accommodated.

The important thing is to start with non-safety critical applications and compartmentalize the AI elements so that they have limited interconnectedness with the rest of the system, especially the safety-critical elements, to ensure safety and security.

Citations

https://www.dcs.gla.ac.uk/~johnson/papers/RESS/Complexity_Emergence_Editorial.pdf

https://en.wikipedia.org/wiki/Ariane_flight_V88

https://www.sciencedirect.com/topics/engineering/v-model